Extract, transform and load

The scripts in this directory are used to extract, transform and load (ETL) the core datasets for Colouring London. This README acts as a guide for setting up the Colouring London database with these datasets and updating it.

Contents

- ⬇️ Downloading Ordnance Survey data

- 🐧 Making data available to Ubuntu

- 🌑 Creating a Colouring London database from scratch

- 🌕 Updating the Colouring London database with new OS data

⬇️ Downloading Ordnance Survey data

The building geometries are sourced from Ordnance Survey (OS) MasterMap (Topography Layer).

- Sign up for the Ordnance Survey Data Exploration License. You should receive an e-mail with a link to log in to the platform (this could take up to a week).

- Navigate to https://orders.ordnancesurvey.co.uk/orders and click the button for: ✏️ Order. From here you should be able to click another button to add a product.

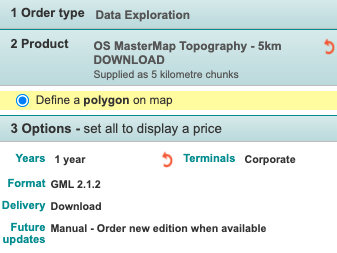

- Drop a rectangle or Polygon over London and make the following selections, clicking the "Add to basket" button for each:

- You should then be able to check out your basket and download the files. Note: there may be multiple .zip files to download for MasterMap due to the size of the dataset.

- Unzip the MasterMap .zip files and move all the .gz files from each to a single folder in a convenient location. We will use this folder in later steps.
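The unzip-and-gather step can be scripted. The following is a minimal sketch, not part of the ETL scripts: the function name `collect_gz` and both folder paths are hypothetical, and it assumes the `unzip` package is installed and that the target folder sits outside the download folder.

```shell
# Hypothetical helper: unzip every archive in a download folder, then
# gather all .gz files into one target folder (kept outside the download folder).
collect_gz() {
    download_dir="$1"    # folder holding the downloaded .zip files
    mastermap_dir="$2"   # single folder used in the later ETL steps
    mkdir -p "$mastermap_dir"

    # Unzip each archive into a sibling folder named after the archive.
    for archive in "$download_dir"/*.zip; do
        [ -e "$archive" ] || continue   # skip when no archives are present
        unzip -o -q "$archive" -d "${archive%.zip}"
    done

    # Move every .gz file, however deeply nested, into the target folder.
    # mv -n refuses to overwrite a file that already exists there.
    find "$download_dir" -type f -name '*.gz' -exec mv -n {} "$mastermap_dir"/ \;
}

# Example call (paths are placeholders):
# collect_gz ~/Downloads/mastermap_zips ~/mastermap_dir
```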

🐧 Making data available to Ubuntu

Before creating or updating a Colouring London database, you'll need to make sure the downloaded OS files are available to the Ubuntu machine where the database is hosted.

If you are using VirtualBox, you could share the host folder(s) containing the OS files with the VM (e.g. see these instructions for Mac).

For a production server hosted on a cloud computing platform (e.g. Azure), you could use SCP.
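As a rough illustration of the SCP route, a recursive copy could look like the sketch below. The function name `copy_to_server`, the `SCP_CMD` override and every path, user and host shown are hypothetical placeholders, not values from the Colouring London setup.

```shell
# Hypothetical helper: copy a local MasterMap folder to a remote server.
copy_to_server() {
    local_dir="$1"    # folder holding the .gz files on your machine
    remote="$2"       # user@host of the server
    remote_dir="$3"   # destination directory on the server
    # -r copies the folder recursively. Setting SCP_CMD=echo prints the
    # arguments instead of transferring anything (a simple dry run).
    ${SCP_CMD:-scp} -r "$local_dir" "$remote:$remote_dir"
}

# Example call (all values are placeholders):
# copy_to_server /path/to/mastermap_dir <username>@<server> /path/on/server
```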

🌑 Creating a Colouring London database from scratch

You should already have set up PostgreSQL and created a database in an Ubuntu environment. If not, follow one of the linked guides: setup dev environment or setup prod environment. Open a terminal in Ubuntu and create the environment variables to use psql if you haven't already:

export PGPASSWORD=<pgpassword>

export PGUSER=<username>

export PGHOST=localhost

export PGDATABASE=<colouringlondondb>
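Because psql reads these variables from the environment, it can help to confirm they are all exported before running the ETL scripts. A minimal sketch, assuming a hypothetical helper named `check_pg_env` that is not part of this repository:

```shell
# Hypothetical helper: report any of the four variables that is not exported.
check_pg_env() {
    missing=0
    for var in PGPASSWORD PGUSER PGHOST PGDATABASE; do
        # printenv exits non-zero when the variable is not in the environment.
        if ! printenv "$var" >/dev/null; then
            echo "Missing environment variable: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example call:
# check_pg_env && echo "psql environment looks complete"
```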

Create the core database tables:

cd ~/colouring-london

psql < migrations/001.core.up.sql

There is some performance benefit to creating indexes after bulk loading data. Otherwise, it's fine to run all the migrations at this point and skip the index creation steps below.

You should already have installed GNU parallel, which is used to speed up loading bulk data.

Move into the etl directory and set execute permission on all scripts.

cd ~/colouring-london/etl

chmod +x *.sh

Extract the MasterMap data (this step could take a while).

sudo ./extract_mastermap.sh /path/to/mastermap_dir

Filter MasterMap 'building' polygons.

sudo ./filter_transform_mastermap_for_loading.sh /path/to/mastermap_dir

Load all geometries. Note: ensure that mastermap_dir has permissions that allow the Linux find command to work without sudo.

./load_geometries.sh /path/to/mastermap_dir
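One way to test the permissions noted above is to run find as your normal user and look at its exit status. This is a sketch with a hypothetical helper name (`check_readable`). The chown suggestion in the comment is one possible fix, not something the ETL scripts do.

```shell
# Hypothetical helper: succeed only if find can walk the whole folder
# as the current user (find exits non-zero on unreadable directories).
check_readable() {
    if find "$1" -type f >/dev/null 2>&1; then
        echo "ok: $1 can be searched without sudo"
    else
        echo "permission problem under $1" >&2
        # One possible fix, if the earlier sudo steps left root-owned files:
        #   sudo chown -R "$USER" /path/to/mastermap_dir
        return 1
    fi
}

# Example call:
# check_readable /path/to/mastermap_dir
```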

Index geometries.

psql < ../migrations/002.index-geometries.up.sql

Drop geometries outside London boundary.

cd ~/colouring-london/app/public/geometries

ogr2ogr -t_srs EPSG:3857 -f "ESRI Shapefile" boundary.shp boundary-detailed.geojson

cd ~/colouring-london/etl/

./drop_outside_limit.sh ~/colouring-london/app/public/geometries/boundary.shp

Create a building record per outline.

./create_building_records.sh

Run the remaining migrations in ../migrations to create the rest of the database structure.

ls ~/colouring-london/migrations/*.up.sql 2>/dev/null | while read -r migration; do psql < "$migration"; done;
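The loop above passes every *.up.sql file to psql, including the two migrations already applied in earlier steps, and it carries on after a failure. A possible variant is sketched below. The helper name `run_remaining_migrations` and the `PSQL_CMD` override are hypothetical. It assumes that 001.core.up.sql and 002.index-geometries.up.sql are the only migrations already run.

```shell
# Hypothetical helper: apply *.up.sql files in name order, skipping the
# migrations already run by hand and stopping at the first failure.
run_remaining_migrations() {
    migrations_dir="$1"
    for migration in "$migrations_dir"/*.up.sql; do
        [ -e "$migration" ] || continue
        case "$(basename "$migration")" in
            001.*|002.*) continue ;;   # applied in the earlier steps
        esac
        # ON_ERROR_STOP=1 makes psql exit non-zero on the first SQL error.
        # Setting PSQL_CMD=echo prints the arguments instead (dry run).
        ${PSQL_CMD:-psql} -v ON_ERROR_STOP=1 -f "$migration" || return 1
    done
}

# Example call:
# run_remaining_migrations ~/colouring-london/migrations
```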

🌕 Updating the Colouring London database with new OS data

In the Ubuntu environment where the database exists, set up the environment variables to make the following steps simpler.

export PGPASSWORD=<pgpassword>

export PGUSER=<username>

export PGHOST=localhost

export PGDATABASE=<colouringlondondb>

First, run git pull to pick up any code changes, then run any new database migrations from ../migrations added since the database was last updated.

Move into the etl directory and set execute permission on all scripts.

cd ~/colouring-london/etl

chmod +x *.sh

Extract the new MasterMap data (this step could take a while).

sudo ./extract_mastermap.sh /path/to/mastermap_dir

Filter MasterMap 'building' polygons.

sudo ./filter_transform_mastermap_for_loading.sh /path/to/mastermap_dir

Load all new geometries. This step only loads geometries that are not already present (based on the TOID). Note: ensure that mastermap_dir has permissions that allow the Linux find command to work without sudo.

./load_new_geometries.sh /path/to/mastermap_dir

Drop new geometries outside London boundary.

cd ~/colouring-london/app/public/geometries

ogr2ogr -t_srs EPSG:3857 -f "ESRI Shapefile" boundary.shp boundary-detailed.geojson

cd ~/colouring-london/etl/

./drop_outside_limit_new_geometries.sh ~/colouring-london/app/public/geometries/boundary.shp

Add new geometries to existing geometries table.

./add_new_geometries.sh

Create a building record to match each new geometry that doesn't already have a linked building.

./create_new_building_records.sh

Mark buildings with geometries not present in the update as demolished.

./mark_demolitions.sh
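Conceptually, the update compares two sets of TOIDs. Geometries found only in the new data are loaded, and geometries found only in the database are marked as demolished. The sketch below illustrates that comparison on plain text files. It is not how the ETL scripts are implemented, and the helper name `toid_diff`, the input files and the sample TOIDs are all hypothetical.

```shell
# Hypothetical illustration: compare two files with one TOID per line.
toid_diff() {
    db_toids="$1"       # TOIDs already in the geometries table
    update_toids="$2"   # TOIDs present in the new MasterMap extract
    tmp_db=$(mktemp)
    tmp_update=$(mktemp)
    # comm needs sorted input; LC_ALL=C keeps sort and comm in agreement.
    LC_ALL=C sort -u "$db_toids" > "$tmp_db"
    LC_ALL=C sort -u "$update_toids" > "$tmp_update"
    # Only in the update: geometries that would be loaded as new.
    LC_ALL=C comm -13 "$tmp_db" "$tmp_update" | sed 's/^/new /'
    # Only in the database: candidates to mark as demolished.
    LC_ALL=C comm -23 "$tmp_db" "$tmp_update" | sed 's/^/demolished /'
    rm -f "$tmp_db" "$tmp_update"
}

# Example call (file names are placeholders):
# toid_diff db_toids.txt update_toids.txt
```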

On a production server

TODO: Update this after PR #794

Run the Colouring London deployment scripts.